Docker Compose

A production deployment requires a working database. Docker Compose can set up both the database and application in one step.

Last time we hardened the application, allowing it to be run in development, test, or production modes. However, this broke the existing Docker build. There were several reasons for the failure, and these need attention before digging into Docker Compose.

In this post I will first repair the broken Dockerfile using a SQLite database, then introduce Docker Compose and use it to create a separate MySQL server alongside the application server.

Missing requirements

Docker allows you to access the logs from a running server by using the command:

docker logs estimator-server

Unfortunately, after rebuilding the estimator application from the dockerfile, the application will not start. I forgot to add the new Python requirements to the prod_requirements file.

Adding the database meant adding SQLAlchemy to the virtualenv we were developing in. Adding the following lines to the prod_requirements file should get things back up and running:

Flask-SQLAlchemy==2.3.2
SQLAlchemy==1.2.14
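A forgotten requirement like this can be caught before the container is built. The following is a minimal sketch, not part of the project, that compares pinned `name==version` lines against what is installed in the current environment. It assumes Python 3.8 or later (for `importlib.metadata`), and the function names `parse_pins` and `find_problems` are made up for illustration.

```python
from importlib import metadata


def parse_pins(text):
    """Parse 'name==version' lines into a dict, skipping blanks and comments."""
    pins = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, _, version = line.partition("==")
        pins[name.strip()] = version.strip()
    return pins


def find_problems(pins):
    """Return a message for each package that is missing or at another version."""
    problems = []
    for name, wanted in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems.append(f"{name} is not installed")
            continue
        if wanted and installed != wanted:
            problems.append(f"{name}: installed {installed}, pinned {wanted}")
    return problems
```

Reading the requirements file and passing its contents through `find_problems(parse_pins(...))` gives a list of mismatches; an empty list means every pinned package is present at the pinned version.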

Ignoring files

When debugging, I also noticed that a lot of unnecessary files were being copied into the Docker image, including the dev version of the SQLite database.

We can tell Docker not to include certain files by adding a .dockerignore to the project root folder. This is what I excluded:

data.sqlite
.git
.gitignore
.pytest_cache
**/__pycache__/
**/*.pyc
.dockerignore
Dockerfile
requirements.txt

Configure SQLite in the Dockerfile

We need to add an environment variable to the dockerfile so that the default profile is used when the application is created. This will get it up and running again.

ENV FLASK_CONFIG=default

This will create a SQLite database file using the same approach as in development. Not how we want production to work, but good enough for now.
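For readers who have not seen the earlier posts, the way FLASK_CONFIG picks a profile can be sketched roughly as below. This is an illustration under assumptions rather than the project's actual config module: the class `DevelopmentConfig`, the `config` dictionary, and the helper `select_config` are hypothetical names, and the SQLite URI is a guess based on the data.sqlite file mentioned above.

```python
import os


class Config:
    """Settings shared by every profile (hypothetical base class)."""


class DevelopmentConfig(Config):
    # Assumed: a file-based SQLite database, as used in development.
    SQLALCHEMY_DATABASE_URI = "sqlite:///data.sqlite"


class ProductionConfig(Config):
    # Left empty in this sketch; production settings come later.
    SQLALCHEMY_DATABASE_URI = None


# Map the profile names that FLASK_CONFIG may contain to config classes.
config = {
    "development": DevelopmentConfig,
    "production": ProductionConfig,
    "default": DevelopmentConfig,
}


def select_config(environ=os.environ):
    """Pick the config class named by FLASK_CONFIG, falling back to 'default'."""
    name = environ.get("FLASK_CONFIG") or "default"
    return config[name]
```

With `ENV FLASK_CONFIG=default` in the Dockerfile, a lookup like this would return the development-style settings, which is why the container ends up with a local SQLite file.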

Initialise the Database

The database must be initialised before it can be used. After switching to creating the app with the create_app function (see Flask Blueprints), I never updated the dbinit.py script to use the new function. This is what it needs to look like:

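import os

from estimator import db, create_app
from database.models import *  # load the models so create_all() knows about them

# Build the app with the configured profile, falling back to 'default'
app = create_app(os.getenv('FLASK_CONFIG') or 'default')

# create_all() needs an application context to find the database settings
with app.app_context():
    db.create_all()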

I will remove and rebuild the docker image and server using the new setup with the following commands:

docker stop estimator-server
docker rm estimator-server
docker build -t estimator-app .
docker run -d -p 5000:5000 --name estimator-server estimator-app

To check that the correct files have now been copied to the server, I can connect a session to the running server as follows and from there run commands such as ls.

docker exec -it estimator-server bash

Exit with the command exit or Ctrl-D.

To initialise the database, first make sure that the server is running and then run this Python script against the server. This needs to be done once each time the server is built.

docker exec -i estimator-server sh -c 'python dbinit.py'

Now the server will respond to GET and POST requests like it did before. See Flask and Docker for some examples.

Compose

So that’s fine. The docker server is working again and now has a local SQLite database behind the application, but that is not really a permanent solution. What we want is to have the application’s database running on a separate server, or in this case, a separate Docker container.

Docker provides a means of orchestrating multiple containers using the docker-compose utility. It brings up all the containers the environment needs with a single command.

Add the yml

This is my first time playing around with docker-compose, so the aim is simply to get it working. Some of what I am doing here is obviously wrong and should not be copied into a production environment, such as the rudimentary password exposed in the following file.

To start with, we need a docker-compose.yml file. YAML is similar to JSON (or even properties files) in that it holds configuration, but it uses indentation to nest levels. For example, build sits within services.web in the following example.

version: '2'
services:
  web:
    build: .
    ports:
      - "5000:5000"
    volumes:
      - /opt/estimator
    links:
      - db:mysql
    container_name: estimator-app-web
    depends_on:
      - db
    environment:
      ESTIMATOR_PASSWORD: rootpass
      ESTIMATOR_USER: root
      ESTIMATOR_DATABASE: estimator
      ESTIMATOR_DB_HOST: mysql
  db:
    image: mysql:5.7
    restart: always
    container_name: estimator-app-db
    environment:
      MYSQL_ROOT_PASSWORD: rootpass
      MYSQL_DATABASE: estimator

Two servers are defined, one beginning on line 3 for the application server, and the second on line 19 for the database.
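If the indentation-based nesting is unfamiliar, it may help to picture the same file as nested dictionaries. The sketch below hand-copies a few keys from the compose file into a Python dict (it does not parse YAML) and uses a made-up helper, `lookup`, to walk a dotted path such as services.web.build.

```python
import json

# A hand-written subset of the compose file as nested dictionaries.
compose_fragment = {
    "version": "2",
    "services": {
        "web": {"build": ".", "ports": ["5000:5000"], "depends_on": ["db"]},
        "db": {"image": "mysql:5.7", "restart": "always"},
    },
}


def lookup(mapping, dotted_path):
    """Follow a dotted path like 'services.web.build' through nested dicts."""
    node = mapping
    for key in dotted_path.split("."):
        node = node[key]
    return node


print(lookup(compose_fragment, "services.web.build"))
print(json.dumps(compose_fragment["services"]["db"], indent=2))
```

Each extra level of indentation in the YAML corresponds to one more key in the path, so the two services are simply the two keys under services.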

Each container is named appropriately. The build: . entry tells the web container to build from the Dockerfile in the same folder. Note that it depends on the db container, so Compose starts the database container first. This controls start order only; it does not wait for MySQL to be ready to accept connections.

As with docker run on a single container, where we specified that port 5000 should be opened, line 6 publishes the port so that connections from my laptop are passed through to the container.
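One caveat with depends_on is that it only orders container start-up; the database process inside the container may still be initialising when the application starts. A minimal sketch of one workaround, assuming nothing beyond the standard library, is to poll the database port until it accepts a TCP connection. The helper name `wait_for_port` is made up, and an open port is only a rough signal: MySQL may accept connections slightly before it is fully usable.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=1.0):
    """Return True once host:port accepts a TCP connection, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError while nothing is listening.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

Inside the web container this could be called as `wait_for_port("mysql", 3306)` before touching the database, where mysql is the link alias from the compose file and 3306 is the default MySQL port.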

Configuration Changes

We left the production configuration settings incomplete above, starting the application with the default settings that use the local SQLite database.

First thing to do is to change the Dockerfile so that it adds the production environment variable:

ENV FLASK_CONFIG=production

Then we need to add configuration so that the application can connect to the database. We added the various parts of the connection URI as environment variables, and these can be accessed by importing os and using it to retrieve the values.

As mentioned in an earlier post, SQLALCHEMY_DATABASE_URI is used by SQLAlchemy to connect to the database.

class ProductionConfig(Config):
    DB_USERNAME = os.getenv('ESTIMATOR_USER')
    DB_PASSWORD = os.getenv('ESTIMATOR_PASSWORD')
    DB_HOST = os.getenv('ESTIMATOR_DB_HOST')
    DATABASE_NAME = os.getenv('ESTIMATOR_DATABASE')
    SQLALCHEMY_DATABASE_URI = 'mysql+pymysql://%s:%s@%s/%s' % (DB_USERNAME, DB_PASSWORD, DB_HOST, DATABASE_NAME)
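One thing to be aware of with this approach is that a missing environment variable silently becomes the string None inside the URI, and a password containing characters such as @ or / would break the URI format. The sketch below is a possible variation, not what the project does: the helper `build_mysql_uri` and the `REQUIRED` tuple are hypothetical, and it fails early on missing values and escapes the credentials with `urllib.parse.quote_plus`.

```python
import os
from urllib.parse import quote_plus

# Environment variable names taken from the compose file.
REQUIRED = (
    "ESTIMATOR_USER",
    "ESTIMATOR_PASSWORD",
    "ESTIMATOR_DB_HOST",
    "ESTIMATOR_DATABASE",
)


def build_mysql_uri(environ=os.environ):
    """Build the SQLAlchemy URI, raising if any required variable is unset."""
    missing = [name for name in REQUIRED if not environ.get(name)]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    # Escape the credentials so special characters cannot corrupt the URI.
    user = quote_plus(environ["ESTIMATOR_USER"])
    password = quote_plus(environ["ESTIMATOR_PASSWORD"])
    host = environ["ESTIMATOR_DB_HOST"]
    database = environ["ESTIMATOR_DATABASE"]
    return "mysql+pymysql://%s:%s@%s/%s" % (user, password, host, database)
```

With the values from the compose file this produces the same URI as the class above, so it could be dropped in as the value of SQLALCHEMY_DATABASE_URI if the stricter behaviour is wanted.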

Add Pymysql

The development environment does not require a MySQL database, but the production server does. Even so, I ran pip install pymysql in my virtualenv, as pip seems to select a version compatible with the packages already installed.

Freezing the requirements into requirements.txt, I then added just the following line to the prod_requirements.txt.

PyMySQL==0.9.2

Fix the Model

Unfortunately when I ran this for the first time, it gave the following error.

sqlalchemy.exc.CompileError: (in table 'group', column 'name'): VARCHAR requires a length on dialect mysql

It appears that SQLite is more forgiving of incomplete models than MySql. I needed to add a size to the string property in the table:

name = db.Column(db.String(32))

Build and Start

It’s straightforward to build the images:

docker-compose build

There may be a slight delay the first time this runs as it downloads various components. Assuming there are no issues, the composition can be brought up with:

docker-compose up

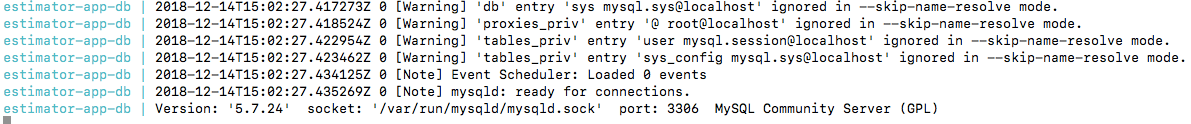

The command starts the servers up with the logs spilling out to screen so it should be possible to see both the application and database starting up.

The MySql database will show additional log lines; it should state that it is running and can take new connections.

Stopping the containers can be done by pressing Ctrl-C and waiting for the servers to shut down gracefully.

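To start the containers in the background (detached mode), use docker-compose up -d. This will not show any logs on screen. Containers that already exist but have been stopped can be restarted with docker-compose start. You can stop them with docker-compose stop no matter which method was used to start.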

Changing Code

I had to rebuild a few times before getting everything working the way I wanted it to, and found that some changes were not applied to the server while others were. For example, adding the PyMySQL dependency to prod_requirements.txt worked immediately, while changes to Python files were not reflected in the newly built container.

To resolve this problem I had to remove the application container before rebuilding:

docker rm estimator-app-web
docker-compose build

Not ideal, but subsequent builds are faster than the initial one.

Initialise and Check the Database

Initialising the database works much the same as before. Make sure the containers are running and use the terminal to run:

docker exec -it estimator-app-web python dbinit.py

Since it is now a MySql database, you can connect and run queries against it from the command line using the following:

docker exec -it estimator-app-db mysql -uroot -prootpass

For example, to check that the table we created is in the database, use the following:

use estimator
show tables;
+---------------------+
| Tables_in_estimator |
+---------------------+
| group               |
+---------------------+
1 row in set (0.00 sec)

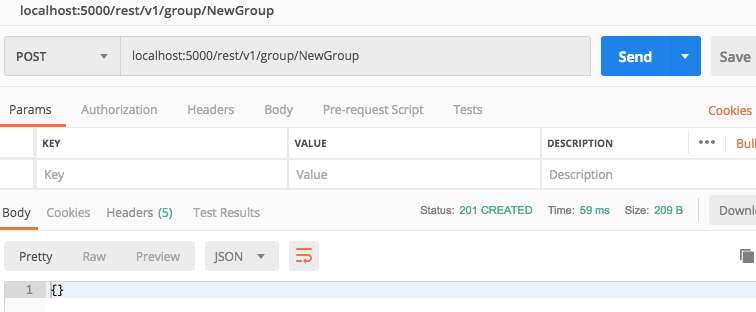

Use Postman

With the application up and running, I was able to use Postman to create a new group.
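For anyone without Postman, the same kind of request can be put together with Python's standard library. This is only a sketch: the /groups path and the JSON body with a name field are assumptions for illustration (check the project's routes for the real endpoint), and the helper `build_create_group_request` is a made-up name. Port 5000 comes from the compose file.

```python
import json
import urllib.request


def build_create_group_request(name, base_url="http://localhost:5000", path="/groups"):
    """Build (but do not send) a JSON POST request that creates a group.

    The path and body shape are hypothetical, not taken from the project.
    """
    body = json.dumps({"name": name}).encode("utf-8")
    return urllib.request.Request(
        base_url + path,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen`, for example `urllib.request.urlopen(build_create_group_request("NewGroup"))`, would send the request to the running web container.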

Query Database

With a terminal connection to the database still open, verify that the row has been inserted with the following.

mysql> select * from estimator.group;
+----+----------+
| id | name     |
+----+----------+
|  1 | NewGroup |
+----+----------+
1 row in set (0.00 sec)

Conclusion

That about wraps up the post. The database is now working in a standalone container. Docker-compose was easy to use and get working.

There are some elements of this code that are unfinished, for example, holding the password for the MySql database in source code is wrong. I will deal with this before moving to a real container on the cloud.

There is a lot more to do in the meantime. While the application allows someone to create a new group, that is just about all it can do. In the next post we will return to adding more functionality.

I am new to Docker and Flask, and this series of posts is looking at these technologies from a beginner's point of view. Please send any comments or corrections to [email protected].

If you are interested in trying out the code in this example, you can access the code on Github here: https://github.com/rkie/estimator